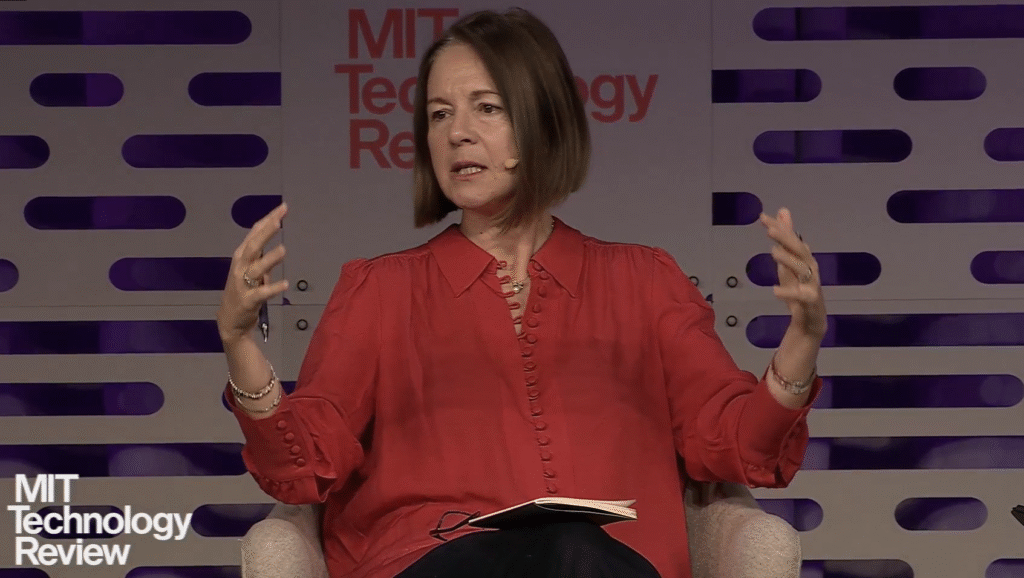

EmTechMIT, Cambridge, MA: The rapidly evolving landscape of artificial intelligence stood front and center during this session: the algorithms that define it, the national borders that increasingly limit it, and the controls that industries and governments seek to assert. “The ABC’s of AI: Algorithms, Borders, Controls,” led by R. David Edelman of MIT and Fiona Murray of MIT Sloan, offered a frank diagnosis of the emerging dynamics of digital sovereignty, technical innovation, and the global stakes of AI leadership.

Algorithms: From Black Boxes to Building Blocks

Edelman kicked off the conversation by zeroing in on the layers of technology (algorithms, hardware, data) that organizations struggle to control. Today, he noted, the greatest anxiety hovers around algorithms, especially the high-stakes models deployed in pivotal sectors. “Companies,” Edelman observed, “are underappreciating the reality that AI, particularly generative AI, is increasingly modular. With one line of code, they can swap out the underlying model. They can use OpenAI, they can use an open-source model. The infrastructure now enables control and experimentation, even for the most basic models.”

This means businesses, large and small, aren’t necessarily beholden to a single vendor or foundational model, a prospect that simultaneously excites and terrifies both enterprise leaders and policy makers. Edelman explained: “The real trend is recognizing the limitations. Today, companies aren’t just using out-of-the-box enterprise scripts. There’s a drive for deeper, domain-specific fine-tuning, on proprietary data. That opens new priorities and new sensitivities, especially when data becomes a matter of government concern.”

The modularity of modern AI models thus becomes a double-edged sword: it enables agility and experimentation, but it also makes it harder to assert durable standards, establish trust, and manage risk as algorithms are changed and swapped with increasing frequency.
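Edelman's "one line of code" remark can be illustrated with a minimal sketch. Everything here is hypothetical: the backend functions are stand-ins that only echo the prompt, not real vendor clients, and the names `BACKENDS`, `MODEL`, and `complete` are invented for illustration. The point is only that when application code talks to a shared interface, the choice of model reduces to a single configuration value.

```python
from typing import Callable, Dict, Optional

# Hypothetical stand-ins for real model clients. A production system would
# wrap a hosted API or a self-hosted open-source model behind the same signature.
def hosted_backend(prompt: str) -> str:
    return f"[hosted] {prompt}"

def open_source_backend(prompt: str) -> str:
    return f"[open-source] {prompt}"

# Registry mapping a configuration name to a backend with a common interface.
BACKENDS: Dict[str, Callable[[str], str]] = {
    "hosted": hosted_backend,
    "open-source": open_source_backend,
}

# The "one line" to change when swapping the underlying model.
MODEL = "hosted"

def complete(prompt: str, model: Optional[str] = None) -> str:
    """Route a prompt to the selected backend (defaults to MODEL)."""
    name = model if model is not None else MODEL
    if name not in BACKENDS:
        raise ValueError(f"unknown model backend: {name!r}")
    return BACKENDS[name](prompt)

if __name__ == "__main__":
    print(complete("Summarize this contract."))
    print(complete("Summarize this contract.", model="open-source"))
```

The same indirection is what makes the risk side of the trade-off concrete: any evaluation or approval attached to one backend does not automatically carry over when the configuration value changes.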

Borders: Digital Sovereignty & Geopolitical Anxiety

If algorithms represent the new digital “DNA,” then borders are increasingly the cell walls: sometimes rigid, sometimes permeable, always pressurized by political and economic imperatives. The session made it clear that geopolitical anxiety is now a defining element of AI’s global narrative.

Edelman provided a candid assessment: “There’s a bit of magical thinking happening among governments. The idea that you can keep generalized large models or cloud-based inference ‘within sovereign borders’ is, functionally, impossible and technically inaccurate.” Governments may mandate data localization, requiring, for instance, that citizen data be kept, or model training take place, on national soil. But the technical underpinnings (cloud networks, globally dispersed R&D, distributed inference) make strict border enforcement highly challenging.

Murray expanded on the infrastructure complexities underpinning these ambitions. “There’s a common notion that data centers, being big and physical, are easier to control. But when you think about chips, cooling, connectivity, and, critically, the supply chain, it becomes clear very few countries can truly execute sovereign AI at scale,” Murray said. The global semiconductor supply chain is especially fraught: “The vast majority of chips ultimately come from a handful of companies, often outside the country trying to assert sovereignty,” she explained.

Edelman also illuminated the economic and political drivers behind sovereign AI pushes: “A lot of national mandates are infrastructure investment plans in national security clothing. Governments want data centers because they build local capacity and look good in election campaigns, but at what real cost? It’s often economically and environmentally unsustainable.”

Controls: Who Commands the AI Future?

A recurring theme in the conversation was who, and what, truly controls the AI future. Is it governments, multinationals, major cloud platforms, or the open-source community?

“Much of what governments claim about the need for sovereignty is posturing,” Edelman argued, “and in practice, technical control remains elusive.” Instead, we’re witnessing a global “arms race” in AI capacity and infrastructure between major economies, most notably the US and China. “The anxiety level among policy officials on both sides is as high as anything I’ve seen in 20-plus years. Both fear the other will unlock a leap, tenfold, a thousandfold, in AI capability or efficiency, and that animates everything from regulation to strategic investments.”

Yet both speakers were skeptical that “third party” actors, such as the UAE, which has poured capital into becoming a Middle East AI powerhouse, can play a meaningful long-term role without addressing the technical and environmental realities of massive AI compute infrastructure. “AI has a cooling problem, and places with limited access to clean, reliable power are fundamentally disadvantaged,” Edelman warned.

Collaboration and Competition: The Paradox of AI Progress

A surprising point, given the competitive framing of US-China AI rivalry, was Edelman’s assertion of a “mutualistic” relationship at the bleeding edge of research: “Despite all the rhetoric, the best research is still posted to arXiv and is fundamentally open. This transparency, and the ongoing human exchange of post-docs and graduate students, remains a stabilizing force and a check against sudden, isolated advances by any one nation.”

Murray noted that the endgame, if geopolitical paranoia continues unchecked, could echo mid-20th-century nuclear fissures: “The Manhattan Project saw the US cut out even its closest allies, creating inefficiency and ultimately greater international tension. Overly aggressive sovereignty pushes in AI could have similar consequences.”

Open Source: The Ultimate Competitive Lever

Both speakers highlighted open source as the wildcard in the future of AI control and competitiveness. Edelman pointed to companies already migrating to open-source foundation models and shared a compelling anecdote: “A business leader told me his firm switched 90% of their inference workloads to open-source AI. It performed at 95% of what they needed for 5% of the cost.” The US government, he added, has quietly recognized open-source AI as “worth having, even given national security concerns, because open models support competition and resilience.”

Murray reinforced this by noting the importance of avoiding vendor lock-in and encouraging a diverse, globally accessible AI ecosystem, grounded in collaborative innovation rather than monopolistic control.
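The figures in Edelman's anecdote can be turned into rough arithmetic. The sketch below rests on assumptions the session did not state: that all inference workloads cost the same before the switch, and that "5% of the cost" means each migrated workload costs 5% of what it did on the original model. The function name `blended_cost` is invented for illustration.

```python
def blended_cost(share_moved: float, relative_cost: float) -> float:
    """Total inference bill as a fraction of the original bill.

    Assumes equally sized workloads (an assumption, not a figure from the talk):
    the unmoved share keeps its original cost, the moved share is scaled
    by relative_cost.
    """
    if not 0.0 <= share_moved <= 1.0:
        raise ValueError("share_moved must be between 0 and 1")
    if relative_cost < 0.0:
        raise ValueError("relative_cost must be non-negative")
    return (1.0 - share_moved) + share_moved * relative_cost

if __name__ == "__main__":
    # 90% of workloads moved, each at 5% of the original cost.
    fraction = blended_cost(0.90, 0.05)
    print(f"Remaining bill: {fraction:.1%} of the original")
```

Under those assumptions, the remaining bill is 0.10 + 0.90 × 0.05 = 0.145, or about 14.5% of the original, most of which comes from the 10% of workloads that were not migrated.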

Where Do We Go From Here? Advice for Technologists

The session closed with pragmatic advice. “First: Don’t judge AI by your first experience,” Edelman cautioned. “The technology is evolving at warp speed; what seemed disappointing a year ago can be remarkable now.” More critically, “the cost of custom engineering and advanced analytics, once the exclusive domain of Fortune 500s, is crashing toward zero. This radically opens opportunities for smaller firms. Use it. Try it. The risk is greater in staying out than jumping in.”

For those outside information industries, Murray’s reminder was that “AI is not just a service-sector story. Heavy industry, logistics, mining, any domain with vast data and complex assets, stands to gain massive productivity and insight from intelligent systems. The opportunities for improvement go far beyond what’s visible in office work alone.”

Conclusion: Navigating the Splintered AI Landscape

The contemporary AI world is defined as much by its boundaries as by its breakthroughs: boundaries of code, of law, of national power, and of practical control. The “ABC’s” are no longer academic; they’re at the core of how organizations, nations, and technologists must operate in the age of splintered AI. As Edelman put it, “If all AI progress stopped right now, we’d still have tens of trillions in economic opportunity to seize. The question is: who will move fastest and smartest to claim it?”
